Overview

This solution is a robust and scalable backend architecture built for high-throughput data ingestion, analytics, and full-text search. To support long-term analytical needs and reduce reliance on Elasticsearch, it includes automated migration scripts and infrastructure to efficiently transfer and normalize data into ClickHouse.

With built-in support for auto-scaling, read replicas, and CDN delivery, the platform targets 99.9 percent uptime, a 50 percent reduction in API response times, and a 40 percent decrease in infrastructure costs. Together, these goals improve developer efficiency and deliver a high-quality user experience.

Problem

Industries such as social media generate massive volumes of user-generated content and metadata every hour. Businesses that rely on this data for marketing, analytics, or influencer intelligence face significant challenges:

- Ingesting and processing hundreds of thousands of profiles and updates in near real-time.

- Running complex filters and full-text searches with high accuracy and speed.

- Aggregating and analyzing time-series data across millions of data points.

- Managing data across multiple storage systems (e.g., Elasticsearch, ClickHouse) without consistency issues or performance bottlenecks.

- Scaling infrastructure efficiently without over-provisioning or driving up costs.

- Ensuring reliability and fault tolerance while maintaining fast developer iteration cycles.

Without a unified, scalable architecture, teams struggle with rising infrastructure costs, slow response times, data inconsistencies, and poor user experience.

Solution

The solution is a modular, open-source Node.js and TypeScript backend that manages the migration from Elasticsearch to ClickHouse, enabling long-term, cost-effective analytical storage while maintaining high performance. It separates real-time search, handled by Elasticsearch, from analytical workloads, handled by ClickHouse, so each system handles the workload it suits best. Automated migration scripts normalize and transfer large datasets while maintaining data integrity and schema consistency.
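As a rough illustration of the normalization step, the sketch below flattens one Elasticsearch search hit into a row suitable for a ClickHouse table. The field names (`username`, `stats.followers`, `updatedAt`) and the `ProfileRow` shape are assumptions made for this example and are not taken from the actual migration scripts. Only the `_id` and `_source` keys reflect the standard Elasticsearch hit layout.

```typescript
// Hypothetical document shape: the fields read from `_source` are illustrative only.
interface EsHit {
  _id: string;
  _source: Record<string, unknown>;
}

// Hypothetical flat row for an analytical table.
interface ProfileRow {
  id: string;
  username: string;
  followers: number;
  updated_at: string; // "YYYY-MM-DD HH:MM:SS" in UTC, a text form ClickHouse DateTime columns accept
}

// Convert an ISO 8601 timestamp to "YYYY-MM-DD HH:MM:SS" (UTC).
// Throws on unparseable input so bad records are caught before insertion.
function toClickHouseDateTime(iso: string): string {
  const d = new Date(iso);
  if (Number.isNaN(d.getTime())) {
    throw new Error(`Invalid timestamp: ${iso}`);
  }
  return d.toISOString().slice(0, 19).replace("T", " ");
}

// Flatten nested fields, coerce types, and trim and lowercase the username.
function normalizeHit(hit: EsHit): ProfileRow {
  const src = hit._source;
  const stats = (src.stats ?? {}) as Record<string, unknown>;
  return {
    id: hit._id,
    username: String(src.username ?? "").trim().toLowerCase(),
    followers: Number(stats.followers ?? 0),
    updated_at: toClickHouseDateTime(String(src.updatedAt)),
  };
}
```

Keeping normalization as a pure function, separate from any database client, makes it straightforward to unit test the mapping before running it against a large dataset.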

The backend is built for high-throughput data ingestion, using batching, compression, and ClickHouse's efficient columnar storage format. ClickHouse supports horizontal scaling with distributed nodes and read replicas, which keeps query latency low even during heavy traffic. The module is designed for integration with Big Data systems and exposes APIs ready for large-scale use that support full-text search, aggregation, and filtering, while significantly lowering infrastructure costs and improving long-term analytics.
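The batching idea can be sketched as follows: rows are split into fixed-size groups, and each group is serialized as newline-delimited JSON, which is the layout of ClickHouse's `JSONEachRow` input format. The helper names `toBatches` and `toJsonEachRow` are hypothetical and introduced only for this example. The actual insert call and any compression are left to whichever ClickHouse client the project uses.

```typescript
// Split an array of rows into consecutive batches of at most `batchSize` items.
function toBatches<T>(rows: T[], batchSize: number): T[][] {
  if (!Number.isInteger(batchSize) || batchSize <= 0) {
    throw new RangeError("batchSize must be a positive integer");
  }
  const batches: T[][] = [];
  for (let i = 0; i < rows.length; i += batchSize) {
    batches.push(rows.slice(i, i + batchSize));
  }
  return batches;
}

// Serialize one batch as newline-delimited JSON (one object per line).
function toJsonEachRow(batch: object[]): string {
  return batch.map((row) => JSON.stringify(row)).join("\n");
}

// Example: five rows in batches of two produce batches of sizes 2, 2, and 1.
const exampleRows = [{ id: 1 }, { id: 2 }, { id: 3 }, { id: 4 }, { id: 5 }];
const exampleBatches = toBatches(exampleRows, 2);
const firstPayload = toJsonEachRow(exampleBatches[0]);
```

Larger batches generally mean fewer, bigger inserts, which tends to suit columnar stores better than many single-row writes. The right batch size depends on row width and available memory, so it is best treated as a tunable setting.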

Features

Automated Data Migration

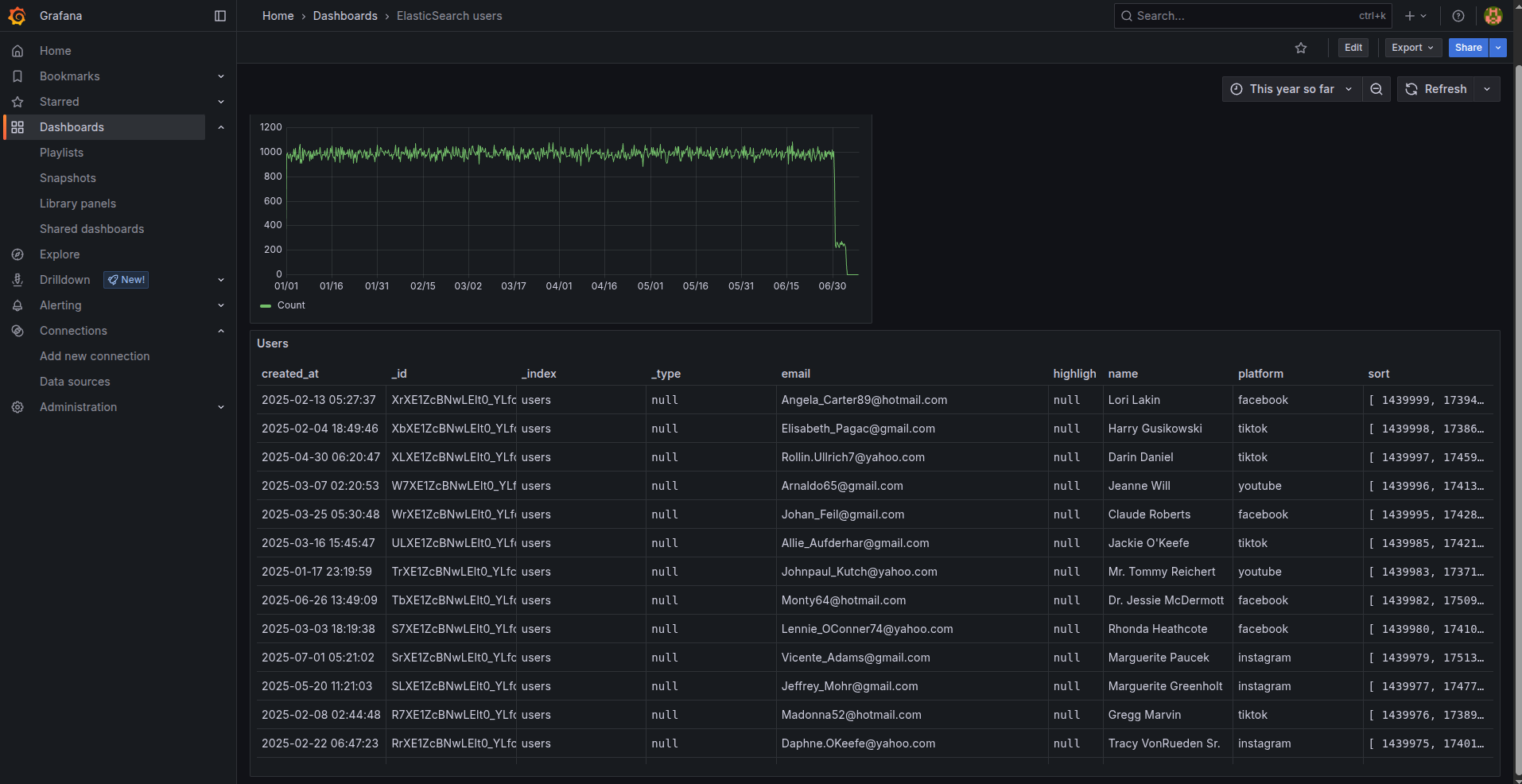

Grafana Dashboards

Winston Logging Integration

Benefits

Improved Performance for Analytical Queries

Lower Infrastructure Costs

Real-Time & Historical Insights

Future-Proof Approach

Questions & Answers

Why migrate from Elasticsearch to ClickHouse for analytics?

Does this solution eliminate Elasticsearch completely?

How is data consistency maintained during migration?

Is this solution suitable for real-time analytics?

Is This the Right Solution for You?

